Hello. We are Luminis Amsterdam!

Part of an international software and technology company with more than 200 experienced professionals.

Luminis Amsterdam is a place where creativity, technology, and data come together. We are future builders who help conceive and accelerate digital solutions for e-commerce, health & welfare, and government. We do that by creating experiences that people want and that organizations need.

Technology evolves quickly. Fortunately, so do we, because technology and innovation are what we do, together, every single day. We are ready and waiting to help you.

We also help companies create added value by translating technological developments into concrete applications, always taking the end user as our starting point.

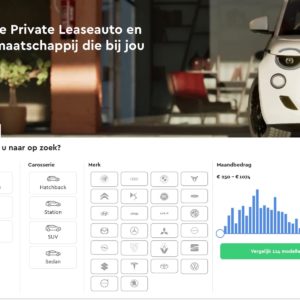

References

At Luminis Amsterdam we work on rewarding and challenging projects. Take a look at a few example cases here.

Vacancies

Luminis is always looking for talented and ambitious colleagues.

Browse our vacancies and drop by for a cup of coffee.

Get in touch

Are you interested in what we do? Do you have a challenge we can help you with? Get in touch with us.

- Suikersilo-West 20, 1165 MP Halfweg, the Netherlands